Imagine a doctor diagnosing a patient solely based on a complex algorithm, offering no explanation for the treatment plan. This scenario, while chilling, mirrors the reality of many machine learning models today. These powerful algorithms, despite their accuracy, often operate like “black boxes,” leaving us clueless about their internal workings. This lack of transparency can be a major barrier to trust, especially in critical fields like healthcare, finance, and law. Fortunately, a growing movement towards interpretable machine learning is shedding light on these complex algorithms, allowing us to understand their decision-making processes and build trust in their outputs.

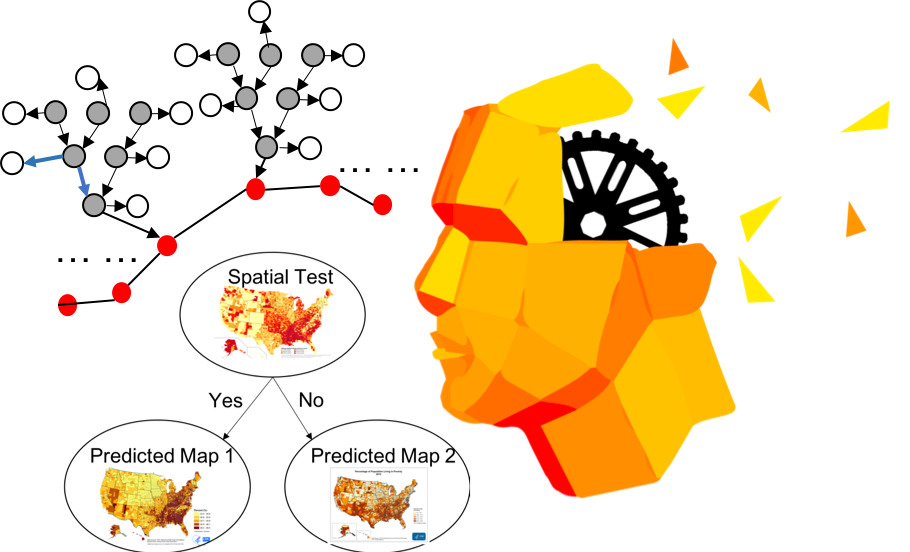

Image: www.jiangteam.org

This guide delves into the fascinating world of interpretable machine learning, focusing on practical Python implementations for unraveling the mysteries behind your models. We’ll explore how this field empowers us to gain insights from machine learning, ultimately leading to more informed and impactful decisions. Whether you’re a data scientist seeking to build explainable models or an enthusiast looking to understand the inner workings of artificial intelligence, this exploration will equip you with the knowledge and tools to navigate this evolving landscape.

Interpreting the Intricacies: Why is Interpretable Machine Learning Important?

The demand for transparency in machine learning is rising rapidly, driven by a confluence of factors. Ethical considerations are paramount: understanding the rationale behind an algorithm’s decisions helps us identify and mitigate potential biases. This is especially crucial in sensitive applications where fairness and accountability are non-negotiable. Additionally, interpretability fosters trust, allowing stakeholders to confidently rely on the insights generated by AI models. This trust is essential for broader adoption of AI solutions, leading to more efficient and effective decision-making across diverse industries.

Beyond ethical concerns, interpretable machine learning offers significant practical advantages:

- Improved model debugging: By understanding the factors influencing predictions, we can pinpoint areas for model improvement. This helps identify errors, fix biases, and optimize performance.

- Enhanced model explainability: Explaining the rationale behind complex predictions makes machine learning models more accessible to a broader audience. This facilitates collaboration between data scientists and domain experts, leading to more impactful and relevant insights.

- Increased user trust: Transparency builds trust, paving the way for wider acceptance and adoption of machine learning solutions in diverse contexts. User confidence is essential for the successful implementation of AI-powered systems.

Unveiling the Black Box: Techniques for Interpretable Machine Learning

The quest for interpretable machine learning has led to the development of various powerful techniques. Let’s explore some of the most widely used methods, along with their strengths and limitations, empowering you to choose the best tool for your specific needs.

1. Feature Importance and Partial Dependence Plots

Imagine you’re trying to understand why a model tends to reject loan applications. Feature importance analysis ranks features by their overall impact on the model’s predictions across a dataset, revealing the driving forces behind the model’s behavior. Note that this is a global view: it shows which features matter on average, not why one particular application was rejected.

- Example: In a loan approval model, credit score, debt-to-income ratio, and length of credit history might rank as the most influential features, marking them as the major contributors to the model’s decisions.

Partial Dependence Plots (PDPs) take this analysis a step further by visualizing how the model’s average prediction changes as one feature (or occasionally two) is varied while the other features keep their observed values. This makes it easier to see whether the relationship between a feature and the predicted outcome is linear, monotonic, or more complex.

Strengths:

- Easy to interpret.

- Widely applicable to a variety of models.

Limitations:

- May not capture complex interactions between features.

- Can be misleading if there are strong correlations between features.
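
To make feature importance and partial dependence concrete, here is a minimal sketch, assuming scikit-learn and NumPy are installed. The loan-style data is synthetic, and the feature names (`credit_score`, `debt_to_income`, `noise_feature`) and the helper `partial_dependence_curve` are hypothetical, invented for illustration. It uses permutation importance, which is one common way to measure feature importance, and computes the partial dependence curve by hand.

```python
import numpy as np
from sklearn.ensemble import RandomForestRegressor
from sklearn.inspection import permutation_importance

rng = np.random.default_rng(0)
n = 500

# Synthetic, made-up loan features (assumption: not real data).
credit_score = rng.uniform(300, 850, n)
debt_to_income = rng.uniform(0.0, 0.8, n)
noise_feature = rng.normal(size=n)  # deliberately unrelated to the target
X = np.column_stack([credit_score, debt_to_income, noise_feature])
feature_names = ["credit_score", "debt_to_income", "noise_feature"]

# Synthetic approval score: rises with credit score, falls with debt ratio.
y = 0.01 * credit_score - 5.0 * debt_to_income + rng.normal(scale=0.1, size=n)

model = RandomForestRegressor(n_estimators=50, random_state=0).fit(X, y)

# Permutation importance: shuffle one column at a time and measure
# how much the model's score drops.
result = permutation_importance(model, X, y, n_repeats=5, random_state=0)
ranking = sorted(
    zip(feature_names, result.importances_mean),
    key=lambda pair: pair[1],
    reverse=True,
)
for name, importance in ranking:
    print(f"{name}: {importance:.3f}")


def partial_dependence_curve(model, X, feature_idx, grid):
    """Average prediction when one feature is forced to each grid value."""
    averages = []
    for value in grid:
        X_mod = X.copy()
        X_mod[:, feature_idx] = value  # overwrite the feature for every row
        averages.append(model.predict(X_mod).mean())
    return np.array(averages)


grid = np.linspace(300, 850, 5)
pdp = partial_dependence_curve(model, X, feature_idx=0, grid=grid)
for value, avg in zip(grid, pdp):
    print(f"credit_score={value:.0f} -> average prediction {avg:.2f}")
```

scikit-learn also ships partial dependence utilities in `sklearn.inspection`; the manual loop here is only meant to show what the curve represents.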


2. Local Interpretable Model-Agnostic Explanations (LIME)

LIME takes a different approach to understanding predictions. Instead of focusing on the entire model, it analyzes predictions locally, focusing on the neighborhood of a specific data point. LIME generates perturbed samples around that point, queries the model on them, and fits a simplified, interpretable model (like a linear regression) that weights nearby samples more heavily. The coefficients of this simple model provide insights into the contribution of each feature to the prediction. This localized analysis allows for a granular understanding of the model’s decision-making process.

Strengths:

- Highly versatile, applicable to various types of machine learning models.

- Provides localized explanations, tailored to specific predictions.

Limitations:

- Explanations hold only near the chosen data point and can vary between runs, since they depend on random sampling.

- Can be computationally expensive for complex models.
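
The local-surrogate idea behind LIME can be sketched with NumPy alone. This is a simplified illustration of the principle, not the `lime` package or its API: the stand-in model `black_box`, the helper `local_linear_explanation`, and the parameters `scale` and `kernel_width` are all hypothetical choices made for this example.

```python
import numpy as np


def black_box(X):
    """Stand-in for an opaque model: nonlinear in feature 0, linear in feature 1."""
    return X[:, 0] ** 2 + 3.0 * X[:, 1]


def local_linear_explanation(predict, x, n_samples=2000, scale=0.5,
                             kernel_width=0.5, seed=0):
    """Fit a proximity-weighted linear model around the point x."""
    rng = np.random.default_rng(seed)
    x = np.asarray(x, dtype=float)

    # 1. Perturb: draw samples in the neighborhood of x.
    samples = x + rng.normal(scale=scale, size=(n_samples, x.size))

    # 2. Query the black-box model on the perturbed samples.
    preds = predict(samples)

    # 3. Weight each sample by its closeness to x (exponential kernel).
    distances = np.linalg.norm(samples - x, axis=1)
    weights = np.exp(-(distances ** 2) / (kernel_width ** 2))

    # 4. Weighted least squares: scaling rows by sqrt(weight) turns it
    #    into an ordinary least-squares problem.
    design = np.column_stack([np.ones(n_samples), samples - x])
    sqrt_w = np.sqrt(weights)
    coef, *_ = np.linalg.lstsq(design * sqrt_w[:, None], preds * sqrt_w, rcond=None)
    return coef[0], coef[1:]  # intercept, per-feature local slopes


intercept, slopes = local_linear_explanation(black_box, [2.0, 1.0])
# The slopes should land close to the local gradient of black_box
# at (2, 1), which is (4, 3).
print("local slopes:", slopes)
```

Explaining a different point, for example `[0.0, 1.0]`, would give a different slope for the first feature, which is exactly why these explanations should not be read as global statements about the model.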

3. SHAP (SHapley Additive exPlanations)

SHAP, based on game theory concepts, provides a powerful framework for understanding the contribution of each feature to a prediction. It assigns a “Shapley value” to each feature, representing how much that feature moves the prediction away from a baseline such as the average prediction. The values for all features add up to the difference between the prediction and that baseline. SHAP values can be visualized in various ways, providing insights into the overall impact of individual features and their interactions.

Strengths:

- Provides a comprehensive and theoretically sound explanation for predictions.

- Allows for the analysis of both single and multiple predictions.

Limitations:

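- Can be computationally expensive: in general, exact Shapley values require evaluating every subset of features, so practical tools often rely on approximations.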

- Requires understanding of game theory concepts.
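
For a handful of features, Shapley values can be computed exactly by enumerating every coalition, which makes the definition easier to see. The sketch below is a pure-Python illustration, not the `shap` library: the function `shapley_values`, the toy model `predict`, and the use of a single fixed `baseline` to stand in for "absent" features are simplifying assumptions for this example.

```python
from itertools import combinations
from math import factorial


def shapley_values(predict, x, baseline):
    """Exact Shapley values by brute-force enumeration of feature subsets.

    Features outside a coalition are replaced by their baseline value.
    Cost grows exponentially with the number of features.
    """
    n = len(x)

    def value(coalition):
        mixed = [x[i] if i in coalition else baseline[i] for i in range(n)]
        return predict(mixed)

    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            # Shapley weight for coalitions of this size.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                coalition = set(subset)
                # Marginal contribution of feature i to this coalition.
                phi[i] += weight * (value(coalition | {i}) - value(coalition))
    return phi


def predict(v):
    """Toy model: one additive term plus an interaction between features 1 and 2."""
    return 2.0 * v[0] + v[1] * v[2]


x = [1.0, 2.0, 3.0]
baseline = [0.0, 0.0, 0.0]
phi = shapley_values(predict, x, baseline)
print(phi)

# Additivity: the contributions sum to prediction minus baseline prediction.
print(sum(phi), predict(x) - predict(baseline))
```

In this toy case the interaction term `v[1] * v[2]` is split evenly between the two features involved, which illustrates how Shapley values share credit for joint effects.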

4. Decision Trees and Rule-Based Models

Decision trees provide an inherently interpretable model: they break down complex decisions into a series of simple rules. Each internal node in the tree tests a feature, each branch corresponds to an outcome of that test, and each leaf holds a prediction. Traversing the tree from root to leaf reveals the logic behind the prediction.

Strengths:

- Naturally intuitive and easily explainable.

Limitations:

- Can grow deep and hard to read when the underlying relationships are highly nonlinear.

- A single tree is often less accurate than ensembles or neural networks on complex tasks.
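
As a small illustration of reading rules straight off a tree, the following sketch assumes scikit-learn is installed and uses its bundled Iris dataset. The depth limit of 2 is an arbitrary choice made here to keep the printed rules short.

```python
from sklearn.datasets import load_iris
from sklearn.tree import DecisionTreeClassifier, export_text

iris = load_iris()

# A shallow tree trades some accuracy for rules a person can read.
tree = DecisionTreeClassifier(max_depth=2, random_state=0)
tree.fit(iris.data, iris.target)

# export_text renders the fitted tree as nested if/else style rules.
rules = export_text(tree, feature_names=list(iris.feature_names))
print(rules)
```

Each indented line in the output is one test on a feature, and each `class:` line is a leaf, so following the indentation from top to bottom reproduces the path a sample takes through the tree.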

5. Model Distillation

In essence, model distillation involves training a simpler, more interpretable model to mimic the predictions of a complex, opaque model. The simpler model is usually somewhat less accurate, but it provides an understandable proxy for the complex model’s behavior. Because the complex model can still be used to make the actual predictions, this process yields insights into its decision-making without sacrificing accuracy.

Strengths:

- Provides an interpretable model that closely mirrors the behavior of a complex model.

Limitations:

- May lead to a slight reduction in accuracy.

- Requires careful selection of the simpler model architecture.
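
One way to try this idea is to fit a shallow decision tree on the predictions of a larger model and then measure how often the two agree. The sketch below assumes scikit-learn and NumPy are installed and uses synthetic data. The names `teacher`, `student`, and `fidelity` are illustrative, and training on the teacher’s hard labels is a simplification (distillation is often done with predicted probabilities instead).

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

# Synthetic data (assumption: stands in for a real problem).
X, y = make_classification(
    n_samples=1000, n_features=6, n_informative=3, random_state=0
)
X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=0)

# Complex "teacher" model trained on the true labels.
teacher = RandomForestClassifier(n_estimators=100, random_state=0)
teacher.fit(X_train, y_train)

# Simple "student" model trained to reproduce the teacher's predictions.
student = DecisionTreeClassifier(max_depth=3, random_state=0)
student.fit(X_train, teacher.predict(X_train))

# Fidelity: how often the student agrees with the teacher on unseen data.
fidelity = np.mean(student.predict(X_test) == teacher.predict(X_test))
teacher_acc = teacher.score(X_test, y_test)
student_acc = student.score(X_test, y_test)

print(f"fidelity to teacher: {fidelity:.2f}")
print(f"teacher accuracy:    {teacher_acc:.2f}")
print(f"student accuracy:    {student_acc:.2f}")
```

Fidelity is the number to watch here: a student that agrees with the teacher only some of the time explains a different model than the one actually in use.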

Interpretable Machine Learning in Action: Practical Applications with Python

The power of interpretable machine learning lies not only in its theory but also in its practical applications. With Python, a versatile and widely used programming language, we can implement these techniques to enhance the explainability and trustworthiness of our models. Let’s explore some real-world application areas:

1. Healthcare: Interpretable machine learning can be invaluable in medical diagnosis, helping doctors make informed decisions by understanding the reasons behind an algorithm’s recommendations. By visualizing the factors contributing to a diagnosis, AI models gain trust and become valuable collaborators in the healthcare domain.

2. Finance: In lending and credit scoring, interpretable models can help banks and financial institutions make fairer and more transparent decisions. By understanding the factors influencing creditworthiness, they can ensure equitable access to financial services for all.

3. E-commerce: Personalized recommendations are the backbone of successful e-commerce platforms. Interpretable machine learning can help retailers understand the features driving product recommendations, leading to more relevant and engaging experiences for customers.

4. Law Enforcement: In criminal justice, interpretable machine learning can help mitigate potential biases in predictive policing models, ensuring fairer outcomes and strengthening public trust in law enforcement practices.

5. Environmental Science: Predicting climate change effects requires sophisticated models. Interpretable machine learning can help scientists understand the factors driving climate predictions, leading to more informed mitigation strategies.


Interpretable Machine Learning: A Path Towards Ethical and Trustworthy AI

The journey towards ethical and trustworthy AI requires a fundamental shift in how we approach machine learning. Interpretability is not just an add-on; it’s a core principle that underpins responsible AI development. By embracing the tools and techniques of interpretable machine learning, we can build more transparent and accountable AI systems, fostering trust in their outputs and empowering informed decision-making across diverse applications.

Call to action: Explore the world of interpretable machine learning! Dive deeper into the libraries and resources available in Python. Start building your own explainable models, and share your experiences with the community. Remember, interpretable machine learning is not just a technical endeavor, but an ethical obligation, shaping a future where AI empowers us all.
